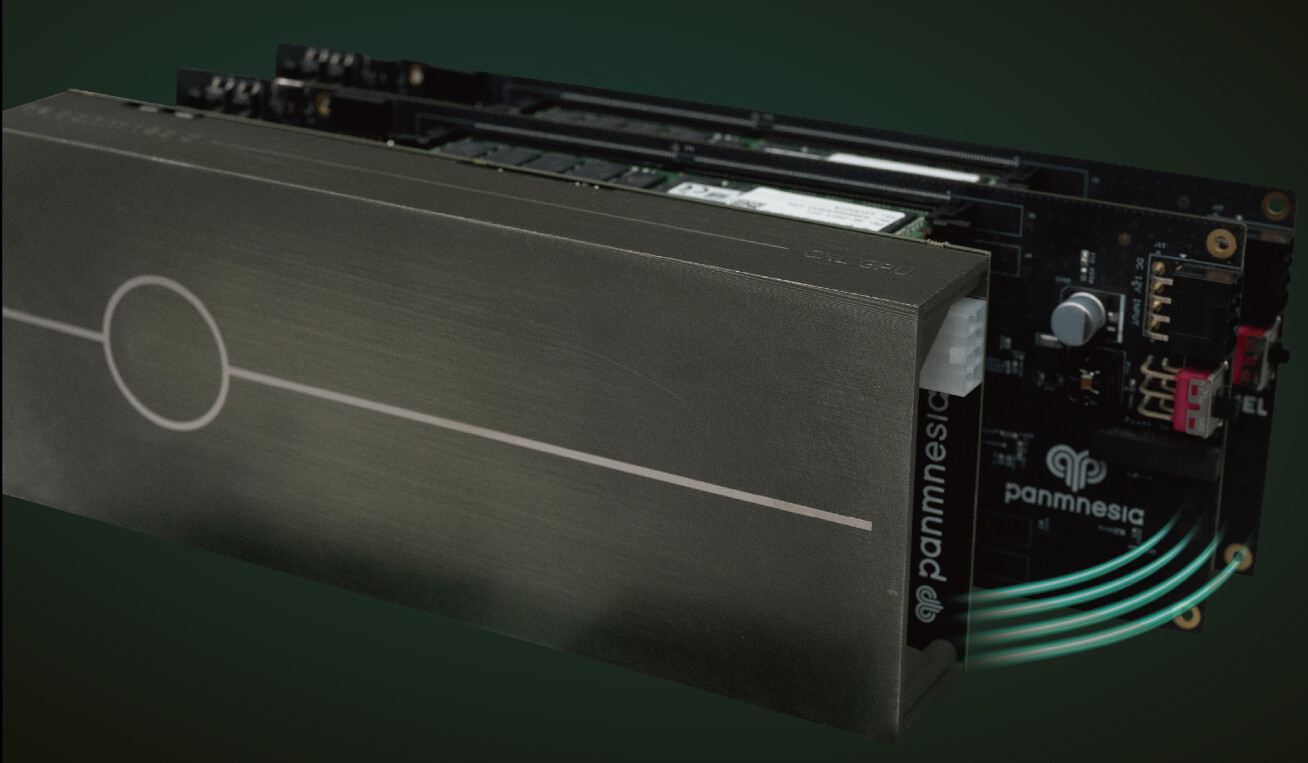

Panmnesia's Innovative Solution for GPU Memory Limitations

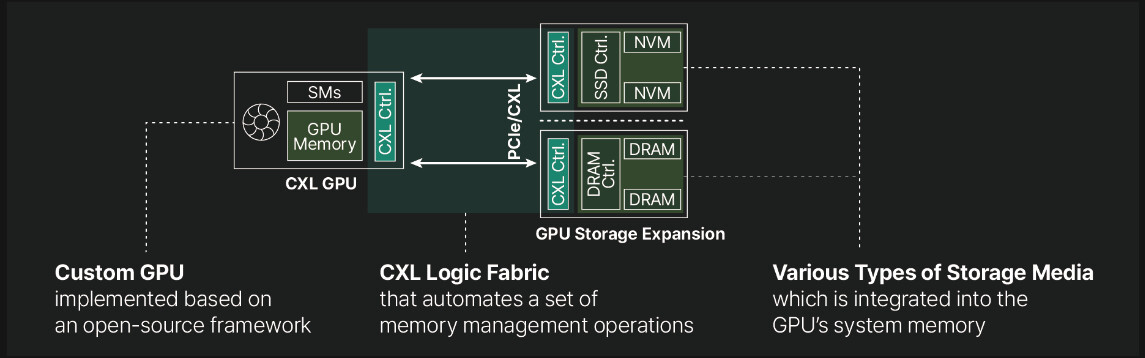

South Korean startup Panmnesia has introduced a new approach to the memory limits of modern GPUs: a low-latency Compute Express Link (CXL) IP that could let a GPU expand its memory through an external add-in card. GPU-accelerated AI and HPC applications are constrained by the fixed amount of memory on each GPU. As data sets grow exponentially, GPU clusters must keep growing just so applications fit in the GPUs' combined local memory, and this scaling affects latency and token generation. Panmnesia's solution uses the CXL protocol to extend GPU memory capacity with DRAM or SSDs attached over PCIe. To get there, the company had to overcome two technical hurdles: GPUs lack a CXL logic fabric, and existing unified virtual memory (UVM) systems have significant limitations.
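To make the scaling pressure concrete, the sketch below computes the minimum number of GPUs whose combined memory can hold a working set, with and without per-GPU memory expansion. It is a back-of-the-envelope, capacity-only model: the 1,000 GB working set, 80 GB of local memory per GPU, and 256 GB of CXL-attached expansion per GPU are hypothetical figures, not numbers from Panmnesia, and the function name `gpus_needed` is illustrative.

```python
import math


def gpus_needed(working_set_gb: float, local_gb_per_gpu: float,
                expansion_gb_per_gpu: float = 0.0) -> int:
    """Smallest GPU count whose combined memory can hold the working set.

    Capacity-only model: ignores compute needs, data replication,
    framework overhead and the slower access to expanded memory.
    """
    per_gpu = local_gb_per_gpu + expansion_gb_per_gpu
    if per_gpu <= 0:
        raise ValueError("per-GPU capacity must be positive")
    return math.ceil(working_set_gb / per_gpu)


if __name__ == "__main__":
    # Hypothetical figures chosen for illustration only.
    working_set = 1000.0  # GB of weights, activations, caches, etc.
    local = 80.0          # GB of on-board memory per GPU (assumed)
    expansion = 256.0     # GB of CXL-attached memory per GPU (assumed)

    print("local memory only:", gpus_needed(working_set, local))
    print("with expansion:   ", gpus_needed(working_set, local, expansion))
```

With these assumed numbers the GPU count drops from 13 to 3. In practice the capacity gain is traded against the higher access latency of external memory, which is why the round-trip latency of the CXL path matters so much.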

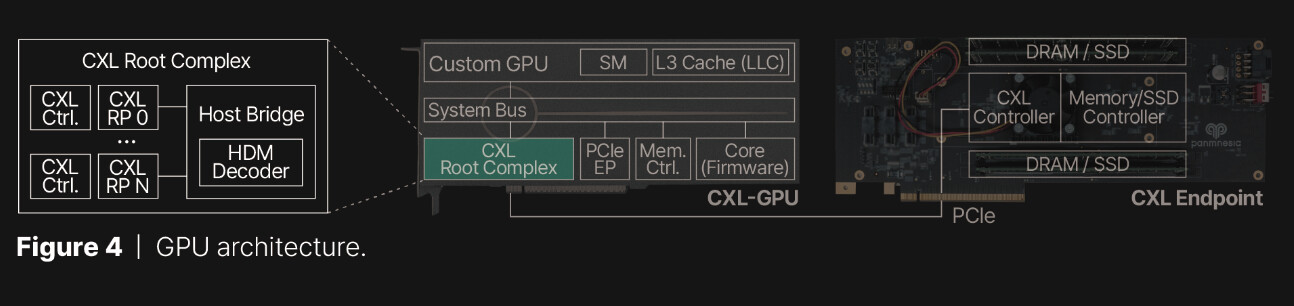

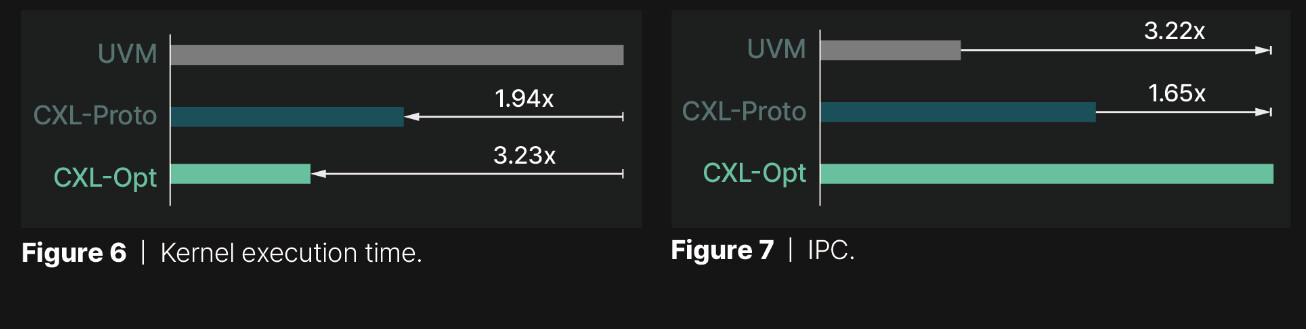

At the core of Panmnesia's solution is a CXL 3.1-compliant root complex with multiple root ports and a host bridge that includes a host-managed device memory (HDM) decoder. This design effectively tricks the GPU's memory subsystem into treating PCIe-connected memory as native system memory.

Panmnesia's testing has shown promising results. Its CXL solution, CXL-Opt, achieved round-trip latency in the double-digit nanoseconds, outperforming both UVM and earlier CXL prototypes. Where older CXL memory extenders had a round-trip latency of about 250 nanoseconds, CXL-Opt could potentially get below 80 nanoseconds, roughly a threefold reduction. In GPU kernel execution tests, CXL-Opt ran kernels up to 3.22 times faster than UVM. Drawbacks remain: memory pooling typically adds latency and degrades performance, and CXL extenders add cost. CXL-Opt could still have practical applications, but it remains to be seen whether organizations will adopt the technology in their infrastructure.
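An HDM decoder is what maps a window of host physical addresses (HPAs) onto memory behind specific CXL ports. The Python sketch below is a simplified model of that idea, assuming a single decoder with a fixed base, size and interleave granularity that stripes consecutive chunks round-robin across its targets. It is not Panmnesia's implementation and does not model the CXL 3.1 register layout (real decoders encode these parameters in register fields and accept only specific way and granularity values); for simplicity it also folds target selection and HPA-to-device-physical-address (DPA) translation into one step. The class `HdmDecoder` and the port names `rp0`/`rp1` are hypothetical.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class HdmDecoder:
    """Simplified HDM decoder model (hypothetical, for illustration).

    Maps an HPA inside [base, base + size) to a (target port, DPA) pair,
    striping consecutive `granularity`-byte chunks round-robin across
    `targets`.
    """
    base: int                 # first HPA covered by this decoder
    size: int                 # bytes of HPA space covered
    granularity: int          # bytes per interleave chunk
    targets: Tuple[str, ...]  # one entry per interleave way, e.g. root ports

    def __post_init__(self) -> None:
        if self.size <= 0 or self.granularity <= 0 or not self.targets:
            raise ValueError("size and granularity must be positive; targets non-empty")

    @property
    def ways(self) -> int:
        return len(self.targets)

    def decode(self, hpa: int) -> Tuple[str, int]:
        if not self.base <= hpa < self.base + self.size:
            raise ValueError(f"HPA {hpa:#x} is outside this decoder's window")
        offset = hpa - self.base
        chunk = offset // self.granularity        # which interleave chunk
        target = self.targets[chunk % self.ways]  # round-robin across ways
        # Each target sees every `ways`-th chunk, packed contiguously.
        dpa = (chunk // self.ways) * self.granularity + offset % self.granularity
        return target, dpa


if __name__ == "__main__":
    decoder = HdmDecoder(base=0x1_0000_0000, size=0x4000_0000,
                         granularity=256, targets=("rp0", "rp1"))
    for hpa in (0x1_0000_0000, 0x1_0000_0100, 0x1_0000_0305):
        port, dpa = decoder.decode(hpa)
        print(f"HPA {hpa:#x} -> {port}, DPA {dpa:#x}")
```

Here consecutive 256-byte chunks alternate between `rp0` and `rp1`; for example, HPA `0x1_0000_0305` lands on `rp1` at DPA `0x105`. Once such a mapping exists, the GPU's memory requests to that window can be routed to external memory like any other address range.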

Benchmarks by Panmnesia

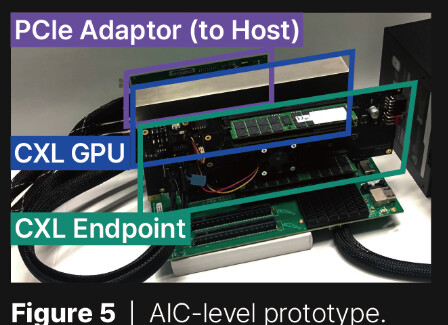

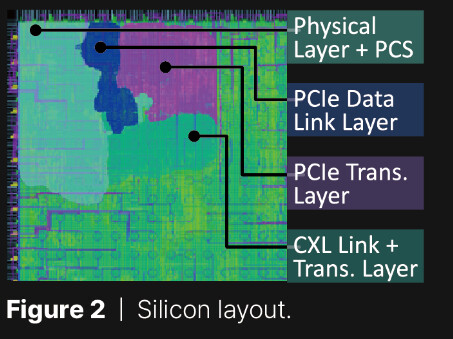

Below are some of the benchmarks provided by Panmnesia, along with the architecture of CXL-Opt: